Performance-hungry developers may want to pay attention. A new contender has entered the caching space, aiming to set the pace: Pogocache.

This new open-source caching system, written from scratch in C with a focus on low latency and CPU efficiency, shipped its 1.0 release just a month ago and has already gathered 1.6K stars on GitHub. And for good reason.

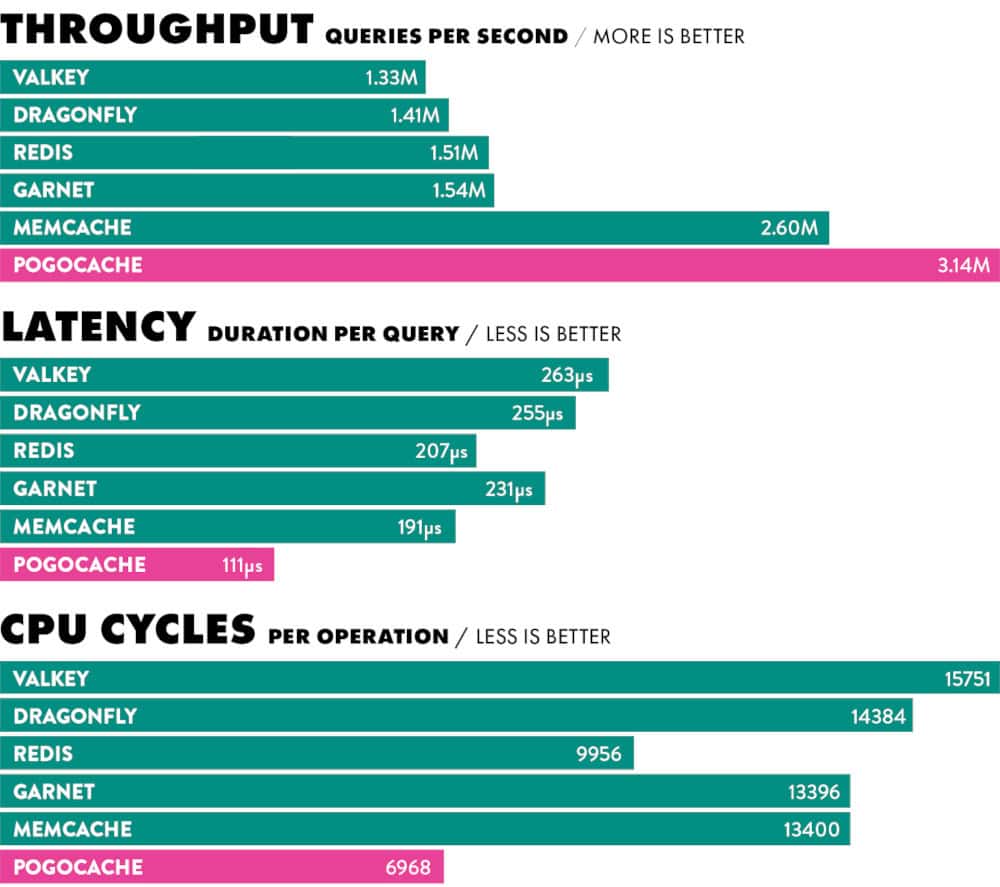

In benchmarks shared by the developer, Pogocache consistently outperforms both established and newer tools such as Redis, Memcache, Valkey, Dragonfly, and Garnet. The gains show up in both throughput and latency tests: in those runs it handled more requests per second while keeping response times low.

But what sets Pogocache apart isn’t just the performance numbers. It also supports multiple wire protocols, including Memcache, Redis (RESP/Valkey), HTTP, and even PostgreSQL.

In other words, it slots into existing workflows without any client code being rewritten. In practice, a developer can point curl, psql, or a Redis client at Pogocache and start caching data right away.
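Part of why "point a Redis client at it" works is that RESP, the Redis wire protocol, is a simple text framing that any server can parse. The sketch below shows how a client encodes a command as a RESP array of bulk strings. `resp_encode` is a hypothetical helper written for illustration, not part of Pogocache or of any Redis client library, and it assumes arguments are NUL-terminated strings.

```c
#include <stdio.h>
#include <string.h>

/* Encode argv as a RESP array of bulk strings into out (cap bytes, including
 * the NUL terminator). Returns the encoded length, or -1 if out is too small.
 * Assumes each argument is a NUL-terminated string; binary-safe values would
 * need explicit lengths instead of strlen. */
static int resp_encode(char *out, size_t cap, int argc, const char *argv[]) {
    size_t used;
    int n = snprintf(out, cap, "*%d\r\n", argc);      /* array header: "*<count>\r\n" */
    if (n < 0 || (size_t)n >= cap) return -1;
    used = (size_t)n;
    for (int i = 0; i < argc; i++) {
        /* each argument: "$<byte length>\r\n<bytes>\r\n" */
        n = snprintf(out + used, cap - used, "$%zu\r\n%s\r\n",
                     strlen(argv[i]), argv[i]);
        if (n < 0 || (size_t)n >= cap - used) return -1;
        used += (size_t)n;
    }
    return (int)used;
}
```

For example, `{"SET", "greeting", "hello"}` encodes to `*3\r\n$3\r\nSET\r\n$8\r\ngreeting\r\n$5\r\nhello\r\n`, the same bytes a standard Redis client puts on the socket for `SET greeting hello`. Any server that parses this framing can serve existing Redis clients unchanged.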

Impressed so far? Keep reading, because the flexibility doesn’t end there. Pogocache can run as a standalone server, but it can also be embedded directly into an application as a single C file. In that mode it bypasses the networking stack entirely and, according to the project, exceeds 100 million operations per second on modern hardware.

So, what’s the secret behind these hard-to-believe numbers? It all comes down to the internal design. Pogocache uses a highly sharded hashmap (thousands of shards in a typical setup) combined with Robin Hood hashing, a collision-resolution strategy for open-addressed hash tables in which an entry that has probed far from its home slot may take the place of one sitting closer to its own.
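To make the Robin Hood idea concrete, here is a minimal single-threaded sketch in C. It is a generic illustration of the technique, not Pogocache’s code, and it assumes fixed capacity, 64-bit integer keys, and no deletion or resizing. The `rh_*` names and the SplitMix64-style hash are hypothetical choices made for this example.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define RH_CAP 64  /* fixed capacity, power of two; no resizing in this sketch */

typedef struct {
    bool     used;
    uint32_t dist;  /* how many slots this entry sits past its home slot */
    uint64_t key;
    uint64_t val;
} rh_slot;

typedef struct {
    rh_slot slots[RH_CAP];
    size_t  count;
} rh_table;         /* zero-initialize before use */

static uint64_t rh_hash(uint64_t x) {  /* SplitMix64 finalizer */
    x ^= x >> 30; x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27; x *= 0x94d049bb133111ebULL;
    return x ^ (x >> 31);
}

/* Returns the slot index holding key, or -1 if absent. */
static long rh_find(const rh_table *t, uint64_t key) {
    size_t i = rh_hash(key) & (RH_CAP - 1);
    for (uint32_t dist = 0; dist < RH_CAP; dist++) {
        const rh_slot *s = &t->slots[i];
        /* Early exit: had key been inserted, it would have displaced any
         * resident that is closer to its own home than key would be here. */
        if (!s->used || s->dist < dist) return -1;
        if (s->key == key) return (long)i;
        i = (i + 1) & (RH_CAP - 1);
    }
    return -1;
}

/* Inserts or updates key. Returns false only if the table is full. */
static bool rh_put(rh_table *t, uint64_t key, uint64_t val) {
    long at = rh_find(t, key);
    if (at >= 0) { t->slots[at].val = val; return true; }
    if (t->count == RH_CAP) return false;
    rh_slot cur = { true, 0, key, val };
    size_t i = rh_hash(key) & (RH_CAP - 1);
    for (;;) {  /* terminates: count < RH_CAP guarantees an empty slot */
        rh_slot *s = &t->slots[i];
        if (!s->used) { *s = cur; t->count++; return true; }
        /* Robin Hood rule: take the slot from a "richer" resident (one
         * closer to home) and carry that resident onward instead. */
        if (s->dist < cur.dist) { rh_slot tmp = *s; *s = cur; cur = tmp; }
        cur.dist++;
        i = (i + 1) & (RH_CAP - 1);
    }
}
```

The swap keeps probe distances evenly spread, which is what lets `rh_find` stop early on a miss instead of scanning to the next empty slot. That bounded, mostly sequential probing is one reason the technique is associated with cache-friendly memory access.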

According to the developer, this approach minimizes contention between threads, since threads working on different keys rarely compete for the same part of the table, and keeps memory access patterns efficient.
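The contention argument can be illustrated with lock striping: split the map into shards, give each shard its own mutex, and use the key’s hash to pick the shard, so a thread only ever locks the one small shard it needs. The C sketch below assumes POSIX threads, 64 shards, and, for brevity, plain linear probing inside each shard (a simpler stand-in for Robin Hood hashing). All names (`sharded_map`, `sm_add`, `sm_get`, `worker`) are hypothetical; the article doesn’t show Pogocache’s own locking scheme.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define SHARD_BITS 6                  /* 64 shards; a real setup may use thousands */
#define NSHARDS    (1u << SHARD_BITS)
#define SHARD_CAP  128                /* fixed slots per shard, power of two */

typedef struct {
    pthread_mutex_t lock;             /* guards this shard only */
    bool     used[SHARD_CAP];
    uint64_t keys[SHARD_CAP];
    uint64_t vals[SHARD_CAP];
} shard;

typedef struct { shard shards[NSHARDS]; } sharded_map;

static uint64_t mix64(uint64_t x) {   /* SplitMix64 finalizer */
    x ^= x >> 30; x *= 0xbf58476d1ce4e5b9ULL;
    x ^= x >> 27; x *= 0x94d049bb133111ebULL;
    return x ^ (x >> 31);
}

static void sm_init(sharded_map *m) {
    memset(m, 0, sizeof *m);
    for (size_t i = 0; i < NSHARDS; i++) pthread_mutex_init(&m->shards[i].lock, NULL);
}

/* Top hash bits pick the shard; low bits pick the starting slot inside it. */
static shard *sm_shard(sharded_map *m, uint64_t h) {
    return &m->shards[h >> (64 - SHARD_BITS)];
}

/* Add delta to key's value, inserting the key if needed. Only the owning
 * shard is locked, so threads on keys in different shards never wait for
 * each other. Returns false if that shard is full. */
static bool sm_add(sharded_map *m, uint64_t key, uint64_t delta) {
    uint64_t h = mix64(key);
    shard *s = sm_shard(m, h);
    size_t i = h & (SHARD_CAP - 1);
    bool ok = false;
    pthread_mutex_lock(&s->lock);
    for (size_t n = 0; n < SHARD_CAP; n++, i = (i + 1) & (SHARD_CAP - 1)) {
        if (!s->used[i]) {            /* empty slot: claim it for this key */
            s->used[i] = true; s->keys[i] = key; s->vals[i] = delta;
            ok = true; break;
        }
        if (s->keys[i] == key) { s->vals[i] += delta; ok = true; break; }
    }
    pthread_mutex_unlock(&s->lock);
    return ok;
}

static bool sm_get(sharded_map *m, uint64_t key, uint64_t *out) {
    uint64_t h = mix64(key);
    shard *s = sm_shard(m, h);
    size_t i = h & (SHARD_CAP - 1);
    bool found = false;
    pthread_mutex_lock(&s->lock);
    /* No deletions in this sketch, so an empty slot ends the probe. */
    for (size_t n = 0; n < SHARD_CAP && s->used[i]; n++, i = (i + 1) & (SHARD_CAP - 1)) {
        if (s->keys[i] == key) { *out = s->vals[i]; found = true; break; }
    }
    pthread_mutex_unlock(&s->lock);
    return found;
}

/* Example workload: each thread bumps every key in [0, nkeys) `rounds` times. */
typedef struct { sharded_map *m; uint64_t nkeys, rounds; } job;

static void *worker(void *arg) {
    job *j = arg;
    for (uint64_t r = 0; r < j->rounds; r++)
        for (uint64_t k = 0; k < j->nkeys; k++) sm_add(j->m, k, 1);
    return NULL;
}
```

To try it, allocate a `sharded_map` (it is large, so make it static or heap-allocated), call `sm_init`, and start several threads with `pthread_create(&tid, NULL, worker, &j)`. With a single global lock every `sm_add` would serialize; here two threads block each other only when their keys land in the same shard, and that becomes rarer as the shard count grows.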

The project runs on 64-bit Linux and macOS (a Docker image is also available) and is licensed under AGPL-3.0. Although it’s still in its infancy, releases are arriving quickly: version 1.0 landed in late July 2025, and version 1.1 followed just three days ago, adding background expiration sweeps for cached keys.
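The release notes summarized here don’t say how Pogocache implements those sweeps. A common general pattern is an incremental sweep that walks a bounded number of slots per call from a saved cursor and frees entries whose TTL has passed. The C sketch below assumes that pattern; `cache`, `entry`, and `sweep_expired` are hypothetical names, the store is a plain slot array rather than a hash table, and the caller passes in the current time.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

#define CACHE_SLOTS 16

typedef struct {
    bool     used;
    uint64_t key;
    uint64_t expires_at;  /* absolute deadline in ms; 0 = no TTL */
} entry;

typedef struct {
    entry  slots[CACHE_SLOTS];
    size_t cursor;        /* slot where the next sweep resumes */
} cache;

/* Visit at most `budget` slots from the cursor and free every entry whose
 * deadline is at or before now_ms. Capping the work per call keeps each
 * pass short, so it can run periodically next to normal traffic instead of
 * stalling it with one full scan. Returns the number of entries freed. */
static size_t sweep_expired(cache *c, uint64_t now_ms, size_t budget) {
    size_t freed = 0;
    for (size_t n = 0; n < budget && n < CACHE_SLOTS; n++) {
        entry *e = &c->slots[c->cursor];
        if (e->used && e->expires_at != 0 && e->expires_at <= now_ms) {
            e->used = false;
            freed++;
        }
        c->cursor = (c->cursor + 1) % CACHE_SLOTS;
    }
    return freed;
}
```

In a server, a timer or background thread might call `sweep_expired` every few milliseconds while holding the relevant lock. Sweeping in the background reclaims memory from keys that are never read again, which purely lazy expiration on lookup would leave in place. In an open-addressed hash table, freeing a slot would also need tombstones or backward-shift deletion so later lookups don’t stop early.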

So, what’s the verdict? It’s too early to say whether Pogocache will achieve broad adoption, but the project clearly positions itself as more than just another Redis or Memcache alternative.

One thing is for sure: for developers already working with caching systems, and especially for those who care about squeezing out every last drop of performance, this newcomer has a lot to offer.

For more information, visit the project’s GitHub page.