AI has, without a doubt, become the hottest tech topic in recent years. Some folks are excited about its endless possibilities, while others worry it’s pushing us dangerously close to a sci-fi-style doomsday. But one thing’s clear—AI is here and getting smarter every day.

Its uses are practically limitless. It can help you whip up a five-minute omelette or, get this, scan massive amounts of source code and spot vulnerabilities. Yep, even in something as complex as the Linux kernel. So, what's going on here? Let's break it down.

On May 22, 2025, security researcher Sean Heelan published a compelling account of how OpenAI's o3 model, one of the newest models behind ChatGPT, uncovered a critical remote zero-day vulnerability in the Linux kernel's SMB implementation, now tracked as CVE-2025-37899.

Heelan's investigation centered on ksmbd, the in-kernel server that implements the SMB3 protocol for network file sharing.

Heelan had been auditing ksmbd manually and wanted to benchmark o3 against what he already knew about the code. In the process, the model independently identified a complex use-after-free vulnerability in the handler for the SMB "logoff" command, an issue Heelan himself had not found.

What makes this finding remarkable is the nature of the bug: it involves concurrency, with shared objects accessed from multiple threads, which is notoriously hard to reason about.

The vulnerability occurs because one thread frees an object while another thread can still access it, and nothing synchronizes the two. The result is a use-after-free that could allow kernel memory corruption and, potentially, arbitrary code execution.
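
To make "proper synchronization" a bit more concrete, here is a minimal userspace C sketch of one common pattern for this class of bug: reference counting under a lock, so an object is freed only when its last user lets go. The `struct session` and `struct user` types and the `session_*` helpers are hypothetical simplifications, not ksmbd's real data structures, and this is not claimed to be the upstream fix.

```c
#include <assert.h>
#include <pthread.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical, simplified types: NOT ksmbd's real structures. */
struct user {
    int uid;
    int refcount;              /* protected by session.lock */
};

struct session {
    pthread_mutex_t lock;      /* guards user and user->refcount */
    struct user *user;
};

static int users_freed = 0;    /* lets callers observe when the free happens */

static int session_init(struct session *s, int uid)
{
    struct user *u = malloc(sizeof(*u));
    if (!u)
        return -1;
    u->uid = uid;
    u->refcount = 1;           /* the session's own reference */
    pthread_mutex_init(&s->lock, NULL);
    s->user = u;
    return 0;
}

/* Reader path: take a reference under the lock, or get NULL if logged off. */
static struct user *session_get_user(struct session *s)
{
    pthread_mutex_lock(&s->lock);
    struct user *u = s->user;
    if (u)
        u->refcount++;
    pthread_mutex_unlock(&s->lock);
    return u;
}

/* Drop one reference; whoever drops the last one frees the object. */
static void session_put_user(struct session *s, struct user *u)
{
    pthread_mutex_lock(&s->lock);
    assert(u->refcount > 0);
    if (--u->refcount == 0) {
        free(u);
        users_freed++;         /* updated under the lock, so race-free */
    }
    pthread_mutex_unlock(&s->lock);
}

/* LOGOFF path: unpublish the pointer, then drop the session's reference.
 * Threads that already hold a reference keep the object alive. */
static void session_logoff(struct session *s)
{
    struct user *u;
    pthread_mutex_lock(&s->lock);
    u = s->user;
    s->user = NULL;            /* new readers now see "logged off" */
    pthread_mutex_unlock(&s->lock);
    if (u)
        session_put_user(s, u);
}
```

In the sequence that matters, a worker calls `session_get_user()`, LOGOFF then runs `session_logoff()`, and the user object stays valid until the worker calls `session_put_user()`; only then is it freed. The key design choice is that readers take their reference under the same lock the LOGOFF path uses to clear the pointer, so no reader can pick up a pointer that is about to be freed.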

Before this discovery, Heelan used another vulnerability, CVE-2025-37778, as a benchmark. This earlier flaw, which he calls the "Kerberos authentication vulnerability," is also a use-after-free, triggered during Kerberos authentication in session setup requests.

Although this vulnerability is remote and impactful, it is relatively contained: finding it requires analyzing only about 3,300 lines of kernel code. Heelan fed o3 the code of specific SMB command handlers and related functions, carefully selected to stay within the model's token limits.

The results were telling: across multiple runs, o3 identified the Kerberos authentication vulnerability two to three times as often as earlier models such as Claude 3.7 Sonnet. More impressively, o3's bug reports read more like those written by human experts: concise, focused, and easy to follow, though sometimes at the expense of detail.

Pushing further, Heelan expanded the scope by giving o3 a larger portion of the codebase covering all SMB command handlers, roughly 12,000 lines of code.

As expected, performance dropped with the larger input, but o3 still managed to pinpoint the Kerberos vulnerability. More intriguingly, it also uncovered a previously unknown vulnerability: the use-after-free bug in the session logoff handler that later became CVE-2025-37899.

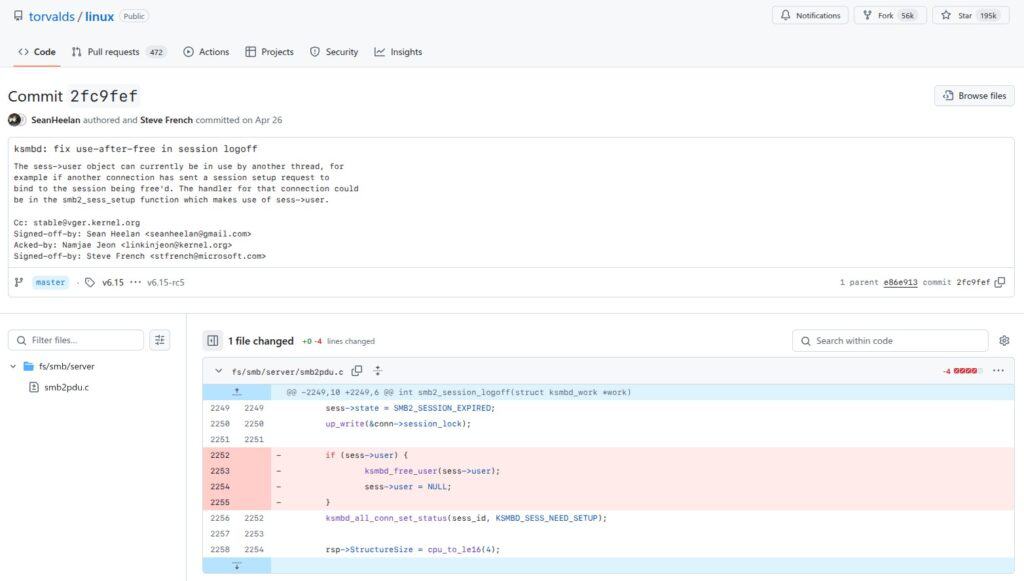

This new vulnerability stems from a race condition between threads accessing the sess->user structure. When one thread processes a LOGOFF command and frees this structure without adequate synchronization, other threads may still dereference the freed pointer, causing memory corruption or denial of service.

Heelan's analysis revealed that merely setting the pointer to NULL after freeing it was not enough to prevent this bug, because SMB allows multiple connections to bind to the same session. A thread serving another connection can read sess->user before the LOGOFF handler clears it and then keep using its now-stale copy.
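
The following C sketch replays that problematic interleaving deterministically in a single thread, to show why clearing the pointer comes too late: connection B loads the shared pointer, connection A then handles LOGOFF (free, then NULL), and B goes on to use the copy it loaded earlier. To keep the example free of real undefined behaviour, "freeing" only sets a flag. The types and function names are hypothetical and are not taken from ksmbd.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model: "freeing" only sets a flag, so the stale access can be
 * detected safely instead of triggering real undefined behaviour. */
struct user { int uid; bool freed; };
struct session { struct user *user; };

/* Step 1 on connection B: load the shared pointer. */
static struct user *conn_b_load(struct session *s)
{
    return s->user;
}

/* Connection A handles LOGOFF: "free" the user, then NULL the pointer. */
static void conn_a_logoff(struct session *s)
{
    assert(s->user != NULL);
    s->user->freed = true;     /* stands in for freeing the object */
    s->user = NULL;            /* too late for B, which already has a copy */
}

/* Step 2 on connection B: use the copy it loaded earlier.
 * Returns true if this access would have touched freed memory. */
static bool conn_b_use(const struct user *u)
{
    return u->freed;
}

/* Replay the bad interleaving B-load, A-logoff, B-use; true means UAF. */
static bool replay_race(void)
{
    struct user u = { .uid = 1000, .freed = false };
    struct session s = { .user = &u };

    struct user *stale = conn_b_load(&s);
    if (stale == NULL)
        return false;          /* B's NULL check passes: pointer looked valid */

    conn_a_logoff(&s);
    return s.user == NULL && conn_b_use(stale);
}
```

Here `replay_race()` returns true: the pointer really is NULL afterwards, yet B's later access still lands on the freed object, because B passed its check before A cleared the pointer.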

Of course, he reported the vulnerability right away. The upstream response was fast, and patches have been merged into every still-maintained kernel branch.

So, the vulnerability is resolved in the kernel source; now it's just a matter of pulling the update from your distro. But, as you may have guessed, the real story here is something else.

While I can't claim this with 100% certainty, this may be the first confirmed real-world case of AI helping to uncover and fix a flaw in the Linux kernel. That's a true precedent, however you look at it, and an example of human insight and machine intelligence working together.

And honestly, the way things are going, this could soon become the norm, which in my view is totally fine and even expected.

Of course, AI is not flawless and can still produce errors (for now). However, there's no denying its ability to carry out complex logical analysis and reason through outcomes at a scale and speed no single person can match.

That alone gives us good reason to believe we’re standing at the edge of a new era—one that could mark the next big leap in human technological evolution. And honestly, I don’t think we’ll have to wait long to see if that’s true.

Until then, if you’re curious and want to dive deeper into the technical side of this specific case, be sure to check out Heelan’s blog post.
